Find a Dust-Free Window Using ADQL

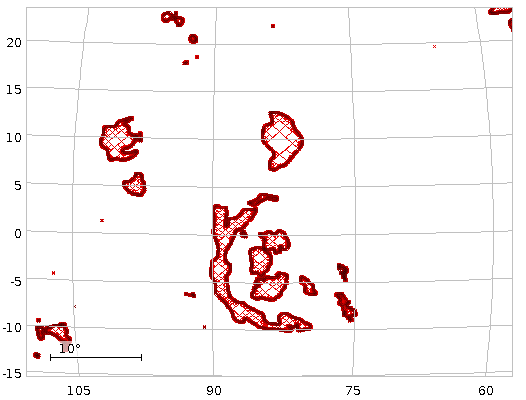

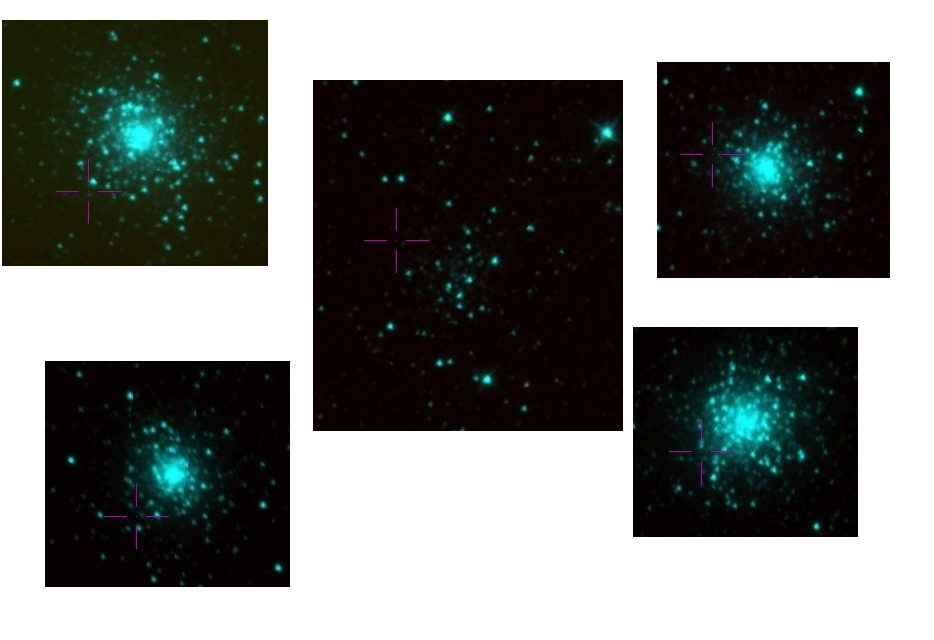

Five of the seven patches of the sky that Bayestar 17 considers least obscured by dust in Aladin's WISE color HiPSes. There clearly is a pattern here. This post is about how you'll find these (and the credible ones, too).

The upcoming AG-Tagung in Bremen will have another puzzler, and while concocting the problem I needed to find a spot on the sky where there is very little interstellar extinction. What looks like a quick query turned out to require a few ADQL tricks that I thought I might show in this little post; they will come in handy in many situations.

First, I needed to find data on where on the sky there is dust. Had I not known about the extinction maps I've blogged about in 2018, I would probably have looked for extinction maps in the Registry, which might have led me to the Bayestar 17 map on my service eventually, too. The way it was, I immediately fired up TOPCAT and pointed it to the TAP service at http://dc.g-vo.org/tap (the “GAVO DC TAP“ of the TAP service list) and went to the column metadata of the prdust.map_union table.

Browsing the descriptions, the relevant columns here are healpix (which will give me the position) and best_fit. That latter thing is an array of reddening E(B − V) (i.e., higher values mean more dust) per distance bin, where the bins are 0.5 mag of distance modulus wide. I decided I'd settle for bin 20, corresponding to a kiloparsec. Dust further away than that will not trouble me much in the puzzler.

Finding the healpixes in the rows with the smallest best_fit[20] should be easy; it is a minor variant of a classic from the ADQL course:

SELECT TOP 20 healpix FROM prdust.map_union ORDER BY best_fit[20] ASC

Except that my box replies with an error message reading “Expected end of text, found '[' (at char 61), (line:3, col:18)”.

Hu? Well… if you look, then the problem is where I ask to sort by an array element. And indeed, it turns out that DaCHS, the software driving this site, will not let you sort by array elements yet. This is arguably a bug, and in all likelihood I will have fixed it by the time your read this. But there is a technique to defeat this and similar cases that every astronomer should know about: subqueries, which turn any query into something you can work with as if it were a table. In this case:

SELECT TOP 30 healpix, extinction FROM ( SELECT healpix, best_fit[20] as extinction FROM prdust.map_union) AS q ORDER BY extinction ASC

– the “AS q“ gives the name of the “virtual” table resulting from the query a name. It is mandatory here. Do not be tempted to leave out the “AS” – that that is even legal is one of the major blunders of the SQL standard.

The result is looking good:

# healpix extinction 1021402 0.00479 1021403 0.0068 418619 0.00707 ...

– so, we have the healpixes for which the extinction works out to be minimal. It is also reassuring that the two healpixes with the clearest sky (by this metric) are next to each other – where there are clear skies, it's likely that there are more clear skies nearby.

But then… where exactly are these patches? The column description says “The healpix (in galactic l, b) for which this data applies. This is of the order given in the hpx_order column”. Hm.

To go from HEALPix to positions, there is the ivo_healpix_center user defined function (UDF) on many ADQL services; it is part of the IVOA's UDF catalogue, so whenever you see it, it will do the same thing. And where would you see it? Well, in TOPCAT, UDFs show up in the Service tab with a signature and a short description. In this case:

ivo_healpix_center(hpxOrder INTEGER, hpxIndex BIGINT) -> POINT returns a POINT corresponding to the center of the healpix with the given index at the given order.

With this, we can change our query to spit out positions rather than indices:

SELECT TOP 30 ivo_healpix_center(hpx_order, healpix) AS pos, extinction FROM ( SELECT healpix, best_fit[20] as extinction, hpx_order FROM prdust.map_union) AS q ORDER BY extinction ASC

The result is:

# pos extinction "(42.27822580645164, 78.65148926014334)" 0.00479 "(42.44939271255061, 78.6973986631694)" 0.0068 "(58.97460937500027, 40.86635677386179)" 0.00707 ...

That's my positions all right, but they are still in galactic coordinates. That may be fine for many applications, but I'd like to have them in ICRS. Transforming them takes another UDF; this one is not yet standardised and hence has a gavo_ prefix (which means you will only find it on reasonably new services driven by DaCHS).

On services that have that UDF (and the GAVO DC TAP certainly is one of them), you can write:

SELECT TOP 30

gavo_transform('GALACTIC', 'ICRS',

ivo_healpix_center(hpx_order, healpix)) AS pos,

extinction

FROM (

SELECT healpix, best_fit[20] as extinction, hpx_order

FROM prdust.map_union) AS q

ORDER BY extinction ASC

That results in:

# pos extinction "(205.6104289782676, 28.392541949473785)" 0.00479 "(205.55600830161907, 28.42330388161418)" 0.0068 "(250.47595812552925, 36.43011215633786)" 0.00707 "(166.10872483007287, 21.232866316024364)" 0.00714 "(259.3314211312357, 43.09275090468469)" 0.00742 "(114.66957763676628, 21.603135736808532)" 0.00787 "(229.69174233173712, 2.0244022486718793)" 0.00793 "(214.85349325052758, 33.6802370378023)" 0.00804 "(204.8352084989552, 36.95716352922782)" 0.00806 "(215.95667870050661, 36.559656879148044)" 0.00839 "(229.66068062277128, 2.142516479012763)" 0.0084 "(219.72263539838667, 58.371829835018424)" 0.00844 ...

If you have followed along, you now have a table of the 30 least reddened patches in the sky according Bayestar17. And you are probably as curious to see them as I was. That curiosity made me start Aladin and select WISE colour imagery, reckoning dust (at the right temperature) would be more conspicuous in WISE's wavelengths then in, say, DSS.

I then did Views -> Activation Actions and wanted to check “Send Sky Coordinates“ to make Aladin show the sky at the position of my patches. This is usually preconfigured by TOPCAT to just work when tables have positions. Alas: at least in versions up to 4.8, TOPCAT does not know about points (in the ADQL sense) when making clever guesses there.

But there is a workaround: Select “Send Sky Coordinates” in the Activation Actions window and then type pos[0] in “RA Column“, and pos[1] in “Dec Column” – this works because under the hood, VOTable points are just 2-arrays. That done, you can check the activation action.

After these preparations, when you click through the first few results, you will find objects like those in the opending image (and also a few fairly empty fields). Stellar clusters are relatively rare on the sky, so their prevalence in these patches quite clearly shows that Bayestar's model has a bit of a fixation about them that's certainly not related to dust.

Which goes to serve as another example of Demleitner's law 567: “In any table, the instances with the most extreme values are broken with a likelihood of 0.567”.

![[RSS]](../theme/image/rss.png)